On The Lack of Video Processing Delay

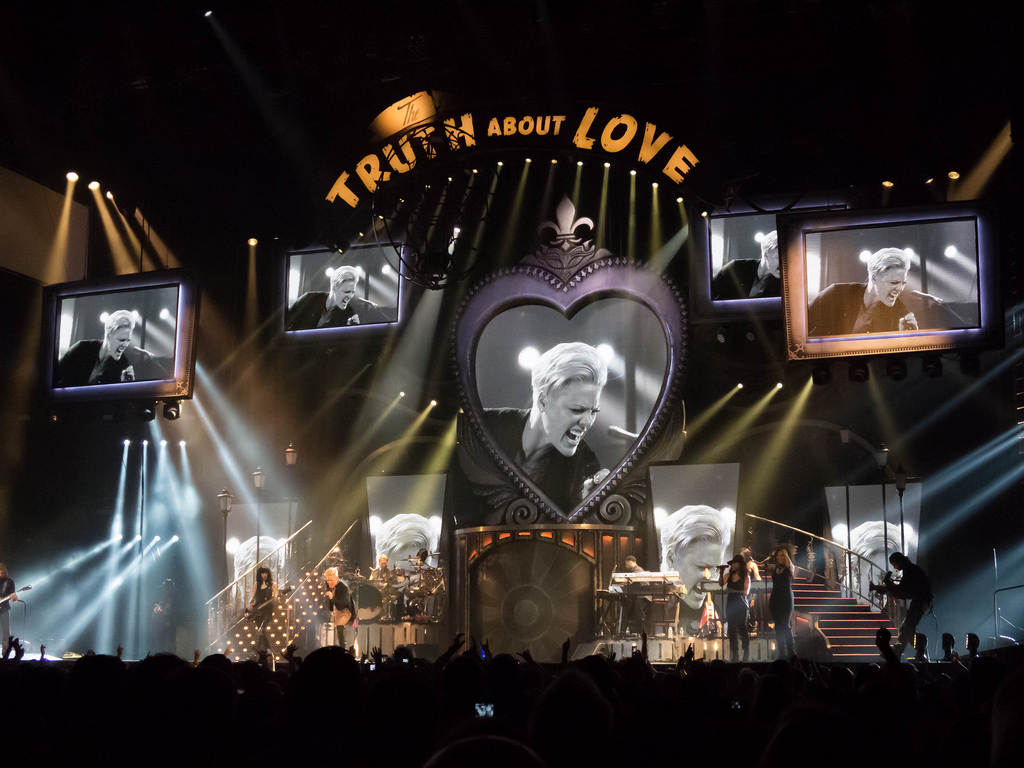

I recently (as of this writing, though probably not as of when you’re reading this!) went to a Pink concert at Staples Center in L.A., and was impressed by multiple things. First, the sound quality was actually good. Here in San Diego, the Sports Arena where concerts of this type are held has horrible acoustics, so actually hearing a rock concert was a nice treat. Second, the production quality was impressive. The whole show was exquisitely choreographed, with numerous set changes and way more acrobatics than I had expected (check out this video that somebody else took at the show to see what I mean). Third, the processing delay for the visual effects was impressive, and by that I mean virtually imperceptible. That third one really grabbed my attention.

At Cirrascale, we have a few customers who deal with live event-based material, but it’s not usually delay sensitive. These customers do things like record live events for later playback or analysis (so practically any delay is tolerable so long as the data eventually makes it to disk) or convert live events into streams that can be viewed online (presumably by people not simultaneously watching the actual event!). In these scenarios, an acceptable lag between when something happens in real life and when it is done being processed (encoded, saved, transmitted, or whatever) is usually measured in seconds, if not minutes. This is markedly different from processing material that is included in a live event.

When I was working at Commodore (and to be honest, even before that, since I liked to geek out on this stuff), I often used the NewTek Video Toaster to create product and technology demonstrations, and worked with a lot of people and companies that were using it for live events. While the Video Toaster could do really cool stuff, one of the things that wasn’t so cool was the processing delay it introduced to the video stream. The workflow had a lot of analog elements to it, so there was very little delay introduced by the cameras recording an event or the monitors displaying the output; most of the delay was due to the processing of the live video stream. A standard definition NTSC stream (roughly 720×480 pixels at just under 60 fields per second) passing through the Video Toaster and a Time Base Corrector could be delayed by amounts approaching 100ms, as you can sort of see in this demo. Keep in mind that the effects being performed were also relatively simple, such as a crude scaling of the video, or altering the chroma or luminance to give false color, posterization, or some other all-over effect on the video frames.

Contrast that with the Pink concert. Many times the performance included projecting the singer’s face or body onto multiple large screens behind the stage, so the audience would see Pink dancing and singing (or doing acrobatics!) in front of larger versions of herself on multiple screens of different sizes around the stage.

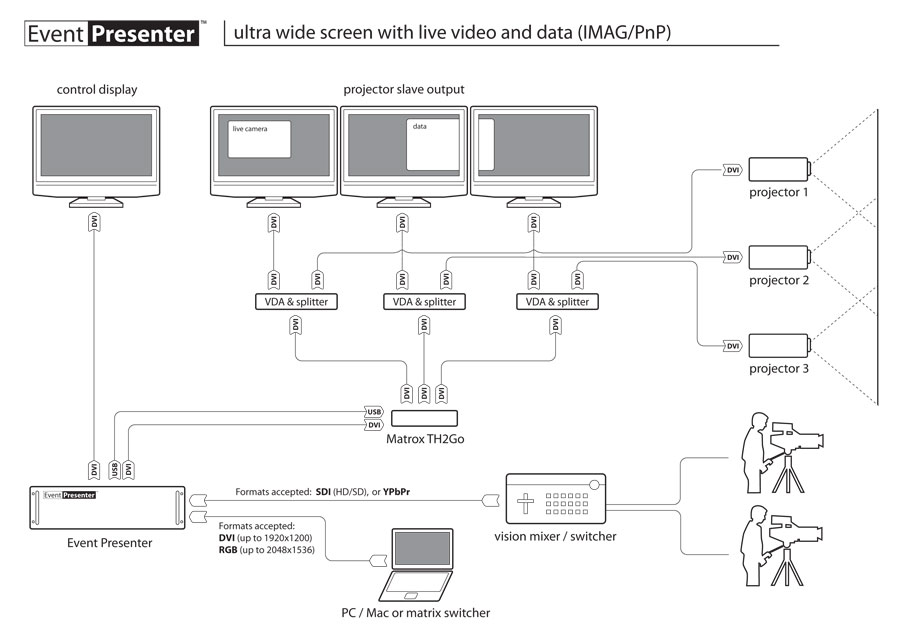

It’s a common thing to do at concerts, award shows, and other performances, but it makes any delay in the video chain very obvious. I have almost no idea what kind of setup would be used for a theatrical performance like the concert I went to, but conceptually it’s far more complex than what I dealt with on the Video Toaster. Products like Event Presenter and Tricaster show some seemingly simple workflows, which seem more like what you’d have at a small event.

Extrapolating from there, I imagine it’s something like capturing images from one or more cameras, applying some effects filters to them, rendering those images to multiple geometries (since there are multiple screens on the stage), and then splitting each image into smaller pieces (I’m guessing each screen on the stage is really a number of smaller screens that are color-matched and stitched together) for display on the final display devices. Even if the images being handled are the equivalent of 480p, that’s a lot of data to process and transport! Assuming the video is manipulated in an uncompressed format at around 16bpp (it’s likely really 24 or 32bpp, but I like to be cautious), we’re talking tens of gigabytes per second of data manipulation going on.
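To put a number on the data volume, here is a rough back-of-the-envelope sketch. It assumes a 720×480 frame at the cautious 16bpp mentioned above and 60 frames per second; the post doesn't give camera, effects-stage, or screen-tile counts, so the second figure simply asks how many stream-passes (one stream moved through one processing step) it takes to reach 10 GB/s.

```python
def stream_bandwidth_bytes_per_sec(width, height, bits_per_pixel, frames_per_sec):
    """Uncompressed bandwidth of one video stream, in bytes per second."""
    bytes_per_frame = width * height * bits_per_pixel // 8
    return bytes_per_frame * frames_per_sec

# Assumed: 480p-equivalent frame, 16 bits per pixel, 60 frames per second
per_stream = stream_bandwidth_bytes_per_sec(720, 480, 16, 60)
print(f"one stream: {per_stream / 1e6:.1f} MB/s")

# Stream-passes (camera feeds x effects stages x screen tiles) needed for 10 GB/s
target_bytes_per_sec = 10e9
print(f"stream-passes for 10 GB/s: {target_bytes_per_sec / per_stream:.0f}")
```

A single stream works out to about 41.5 MB/s, so aggregate figures in the tens of gigabytes per second only arise once you multiply across a few hundred stream-passes, or move to higher bit depths and resolutions.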

If my quick Googling is to be believed, all of that data movement and processing needs to be done in under 20ms to be viewed as happening in sync with the actual performance. There are obviously products that can handle that, such as the NewTek Tricaster (the spiritual successor to the aforementioned Video Toaster) and the Showkube KShow, but even there a scan of the Tricaster support forum has users complaining of 7-frame and even 3-frame delays…so we’re back to the 100ms range again, albeit with some pretty cool functionality. Whatever was being used at the Pink concert must have been way beefier than those products!
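Frame counts are easier to compare against the 20ms figure once converted to milliseconds. This sketch assumes the forum delays were counted in NTSC-rate frames (30000/1001, about 29.97 frames per second); the forum posts themselves may have used a different rate.

```python
NTSC_FRAME_RATE = 30000 / 1001  # assumed frame rate, about 29.97 frames per second
BUDGET_MS = 20.0                # the "in sync" threshold quoted above

def frames_to_ms(frames, frame_rate=NTSC_FRAME_RATE):
    """Convert a delay measured in video frames to milliseconds."""
    return frames * 1000.0 / frame_rate

for frames in (1, 3, 7):
    delay = frames_to_ms(frames)
    verdict = "within" if delay <= BUDGET_MS else "over"
    print(f"{frames} frame(s): {delay:.1f} ms ({verdict} the {BUDGET_MS:.0f} ms budget)")
```

At this rate even a single frame (about 33ms) is over a 20ms budget, three frames is about 100ms, and seven frames is about 234ms.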

Of course, while trying to satisfy my curiosity about how this low-delay production was actually done, I realized that it could be just a viewing effect. Staples Center’s floor is roughly the size of a hockey rink, and my seat was about two-thirds of a rink length away.

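That’s roughly 134 feet, which means the sound reached me nearly 120ms after it left the stage. This assumes I’m hearing the sound projected from the stage, which is likely not the case, but let’s pretend it is. Light from the stage and the screens, by contrast, arrives essentially instantly, so a video chain with a Video Toaster-sized delay of around 100ms would have lined up closely with the sound I was hearing. The net result is that the processing delay may not have been reduced since the Video Toaster days, but the capabilities certainly have been.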
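The acoustic delay is a one-line calculation. This sketch assumes a speed of sound of about 1,125 ft/s (dry air near 20 °C; the real value varies with temperature) and uses the post’s 134-foot seat distance, with light included for comparison.

```python
SPEED_OF_SOUND_FT_PER_S = 1125.0         # assumed: dry air at roughly 20 °C (68 °F)
SPEED_OF_LIGHT_FT_PER_S = 983_571_056.0  # in vacuum; air is only slightly slower

SEAT_DISTANCE_FT = 134.0  # the post's estimate, about two-thirds of a rink length

def delay_ms(distance_ft, speed_ft_per_s):
    """Travel time over a distance, in milliseconds."""
    return distance_ft / speed_ft_per_s * 1000.0

print(f"sound: {delay_ms(SEAT_DISTANCE_FT, SPEED_OF_SOUND_FT_PER_S):.0f} ms")
print(f"light: {delay_ms(SEAT_DISTANCE_FT, SPEED_OF_LIGHT_FT_PER_S) * 1000:.2f} us")
```

Sound takes about 119ms to cover that distance while light takes well under a microsecond, which is why a video delay in the 100ms range could plausibly go unnoticed from that seat.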

As Moore’s Law suggests, technological innovation continues at a rapid pace. The growing capabilities of the products and technology I get to work with here at Cirrascale continually wow me, and seeing even simple things like “delay-less” video effects during a live performance is yet another reminder that advancements can manifest themselves in many ways. In my day-to-day work, it’s faster processors, more complex GPUs, and I/O devices with lower latency and higher throughput that solve problems related to HPC and storage applications. It’s interesting and refreshing to see advancements in other areas when I’m out and about in my daily life, and to know that the things I work with every day are helping make them possible.

Very thought-provoking post…

I did a lot of video work early on with the Amiga as well, and more recently at Swarmcast, where we were enabling high-def streaming over best-effort bandwidth by slicing and dicing the streams. One thing to keep in mind when talking about audio and video is the difference between latency, jitter, and synchronization.

Latency itself comes in two kinds: processing latency and propagation latency. Propagation latency is the minimum time the signal needs to transit the medium given the distance; processing delay is, well, processing delay. As noted, in most non-live applications latency is almost irrelevant. The latency of a satellite-fed video stream can approach several whole seconds, for instance, and less than half a second of that is propagation delay, since the round trip is only ~50,000 miles.

Jitter, on the other hand, is the variation in delay. Our brains will correct for latency up to some threshold (20-50ms is typical for video, 10-20ms for audio) but will notice jitter at a much lower threshold. Our brains also do lots of visual processing, so people will gloss over things like a video gap of up to 30-50ms (this is why movie projection worked). Our hearing does much less processing, so we will notice pops and clicks as short as 3-5ms.

Last is the issue of synchronization. In virtually all systems (especially now with digital processing), the audio and video take completely different paths through the system. Even basic tasks like playing back a movie on a PC present real challenges in keeping the audio and video synchronized.
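The propagation part of satellite latency can be checked with a quick calculation. This sketch assumes a geostationary satellite at about 22,236 miles altitude and a ground station directly beneath it (the shortest possible path); real ground stations see the satellite at an angle, which lengthens the path toward the commenter’s ~50,000 miles.

```python
SPEED_OF_LIGHT_MI_PER_S = 186_282.0
GEO_ALTITUDE_MI = 22_236.0  # geostationary altitude above the equator

def propagation_delay_s(path_miles):
    """Time for a radio signal to cover a path at the speed of light, in seconds."""
    return path_miles / SPEED_OF_LIGHT_MI_PER_S

# Uplink plus downlink with the ground station directly below the satellite
minimum_path = 2 * GEO_ALTITUDE_MI
print(f"minimum up+down path: {minimum_path:,.0f} miles, "
      f"{propagation_delay_s(minimum_path) * 1000:.0f} ms")
print(f"~50,000-mile path: {propagation_delay_s(50_000) * 1000:.0f} ms")
```

Both cases land between roughly 240ms and 270ms, comfortably under half a second, so the rest of a multi-second satellite delay is processing.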

In a modern system, video and audio signals typically get “packetized” several times (at least two or three: maybe IP on a network, and newer digital video signaling like HDMI and DisplayPort is packetized too). Every round of packetization introduces some additional latency, quite a bit if the device isn’t using cut-through transmit and receive (that is, able to start processing or transmitting the data without first buffering a full packet).
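The cost of buffering a full packet at every hop can be sketched with a simple model that ignores propagation and processing time. The packet size, header size, link rate, and hop count below are illustrative assumptions, not figures from the comment.

```python
def store_and_forward_s(packet_bits, link_bps, links):
    """Every device buffers the whole packet before sending it on,
    so the full serialization time is paid once per link."""
    return links * packet_bits / link_bps

def cut_through_s(packet_bits, header_bits, link_bps, links):
    """Each intermediate device starts forwarding once it has read the header,
    so the full serialization time is paid only once."""
    return packet_bits / link_bps + (links - 1) * header_bits / link_bps

PACKET_BITS = 1500 * 8  # assumed: a full-size Ethernet payload
HEADER_BITS = 64 * 8    # assumed: bytes read before forwarding can begin
LINK_BPS = 1e9          # assumed: 1 Gb/s links
LINKS = 4               # assumed: four links in the path

print(f"store-and-forward: {store_and_forward_s(PACKET_BITS, LINK_BPS, LINKS) * 1e6:.1f} us")
print(f"cut-through:       {cut_through_s(PACKET_BITS, HEADER_BITS, LINK_BPS, LINKS) * 1e6:.1f} us")
```

Per network packet the numbers are only tens of microseconds, but the same structure applies when the unit a device must buffer is a whole video frame: at 60 frames per second, each full-frame buffer in the chain adds about 16.7ms.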

So I’m not really driving at any point, other than that it is indeed very, very hard to provide a really good mixed-media transport of a media stream. An interesting question is whether, for live events, there would be enough of a market for more specialized gear with low latency and tight synchronization. Can anybody think of other applications with similar needs? Maybe audio and/or video in a multi-display setting like an office, a retail store, or a home? I can notice differences in latency even with things like a football game on two TVs fed by separate set-top boxes. For example, I have noticed the DirecTV non-DVR boxes have about 1/8 to 1/4 second less latency than the DVR box. Having the same thing playing on two TVs a quarter-second apart can be pretty jarring on the audio in the house. Interesting thinking.

Your jitter comment is indeed key. However, you overcome jitter either by dropping frames (eew) or by adding buffers to absorb the jitter, which in turn adds latency, which leads to all the ugly things you mention.
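The buffering trade-off can be illustrated with a toy jitter buffer. This sketch assumes packets are sent at a fixed interval and each one is scheduled to play a fixed playout delay after it was sent, so a packet is late (and effectively dropped) whenever its network delay exceeds that playout delay. The 40ms base latency and 30ms of uniform jitter are hypothetical numbers chosen only for illustration.

```python
import random

def late_fraction(network_delays_ms, playout_delay_ms):
    """Fraction of packets that arrive after their scheduled playout time.

    Packet i is sent at i * interval and played at i * interval + playout_delay_ms,
    so the send interval cancels out: a packet is late exactly when its network
    delay exceeds the playout delay.
    """
    late = sum(1 for d in network_delays_ms if d > playout_delay_ms)
    return late / len(network_delays_ms)

random.seed(1)
# Hypothetical network: 40 ms base latency plus up to 30 ms of uniform jitter
delays = [40 + random.uniform(0, 30) for _ in range(10_000)]

for playout in (45, 55, 65, 70):
    print(f"buffer to {playout} ms: {late_fraction(delays, playout):.1%} of packets late")
```

Raising the playout delay drives the late (dropped) fraction toward zero, but only by adding exactly that much latency to every packet.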

I, too, am driven crazy by two audio sources slightly out of sync. The example that seems craziest is listening to HD Radio in my car. When the HD signal is poor (really, “not enough packets in the buffer”), the radio mixes the HD and the analog audio together. Unfortunately, many HD stations don’t have their HD and analog audio perfectly synced, so you get a cacophony of the HD signal followed milliseconds later by the analog signal. It drives me nuts!

Of course, a more fun use of delay is something like Speech Jammer, which uses audio processing delays to really mess with your head.
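The basic building block behind a delay effect like this is a delay line: a buffer that hands back each audio sample a fixed number of samples after it went in. The sketch below is a generic illustration, not Speech Jammer’s actual implementation; the 48 kHz sample rate and 0.2-second delay are assumptions.

```python
from collections import deque

class DelayLine:
    """Delays a stream of audio samples by a fixed number of samples."""

    def __init__(self, delay_samples):
        # Pre-fill with silence: these are returned while real input "catches up".
        self.buffer = deque([0.0] * delay_samples)

    def process(self, sample):
        """Push one input sample and return the sample from delay_samples ago."""
        self.buffer.append(sample)
        return self.buffer.popleft()

SAMPLE_RATE = 48_000  # assumed sample rate, in samples per second
DELAY_S = 0.2         # assumed delay, not the app's actual setting
line = DelayLine(round(SAMPLE_RATE * DELAY_S))
```

For example, `DelayLine(3)` fed the samples 1, 2, 3, 4, 5 returns 0, 0, 0, 1, 2: three samples of silence, then the input shifted by three samples.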

I’ve worked a lot of small music events, everything from setup to being the one performing, so I understand a little bit of what goes on behind the scenes… and it always amazes me to see these massive events with high sound quality and lights. Nothing is more annoying than seeing a live event and noticing the lag. That was a very interesting post, and way to throw the Sports Arena under the bus :-p

Really enjoyed this post. I was recently at an LMFAO/Black Eyed Peas concert and was mesmerized by the amount of “supportive” material used for it. By that I mean lights, videos, smoke machines, etc. I found myself pondering the amount of technology needed to do that type of work, and the behind-the-scenes programming as well. I didn’t put much thought into the delay, but now you really have me thinking about it, especially with the content in your last two paragraphs. Hmmm.